When code review meetings get derailed, they become painful and unproductive. We will cover in this post how to make them focused and effective sessions by eliminating DONTs and doing more of DOs. Though the post focuses on the .NET environment, the principles apply to any object-oriented environment.

A process cannot be a substitute for a skill, but can enable continuous improvement.

Software design is a team effort. As the code is developed, a developer makes many design decisions on a daily basis such as adding methods, creating associations between classes, use of switch statements and so on.. Whether these are good ones is a different problem. When a team comes to embrace this reality, it finds a need for the different type of technical leaders. For example, Architectus Oryzus is such a leader that enables team design activities while acting as a guide when needed. Martin Fowler writes that a guide is a more experienced and skillful team member, who teaches other team members to fend better for themselves yet is always there for the tricky stuff.

Depending on skill levels and understanding of the business domain, developers make bad decisions. You will miss teachable moments if you try to avoid them by taking control over the design. The reviews present these teachable moments. Pair Programming is another such practice that presents the opportunities for mentoring. Both the reviews and the pairing improve collective code ownership.

Code Consistency, taken care by individual programmers, helps the team to focus on the design and the functionality. Automate the consistency related guidelines as much as possible.

You will see how code reviews become focused with the little effort. You will find practical guidance on continuous improvement as essential skills need learning and practice.

Take care of typos

Tools such as Respeller, a R# plugin, can help in finding the typos as they happen. It checks for misspelled words in comments, strings, and identifiers- classes, methods, variables and so on..

If needed, you can ask for a quick review from one or two people. Early reviews of public contracts prevent misspellings from reaching the world.

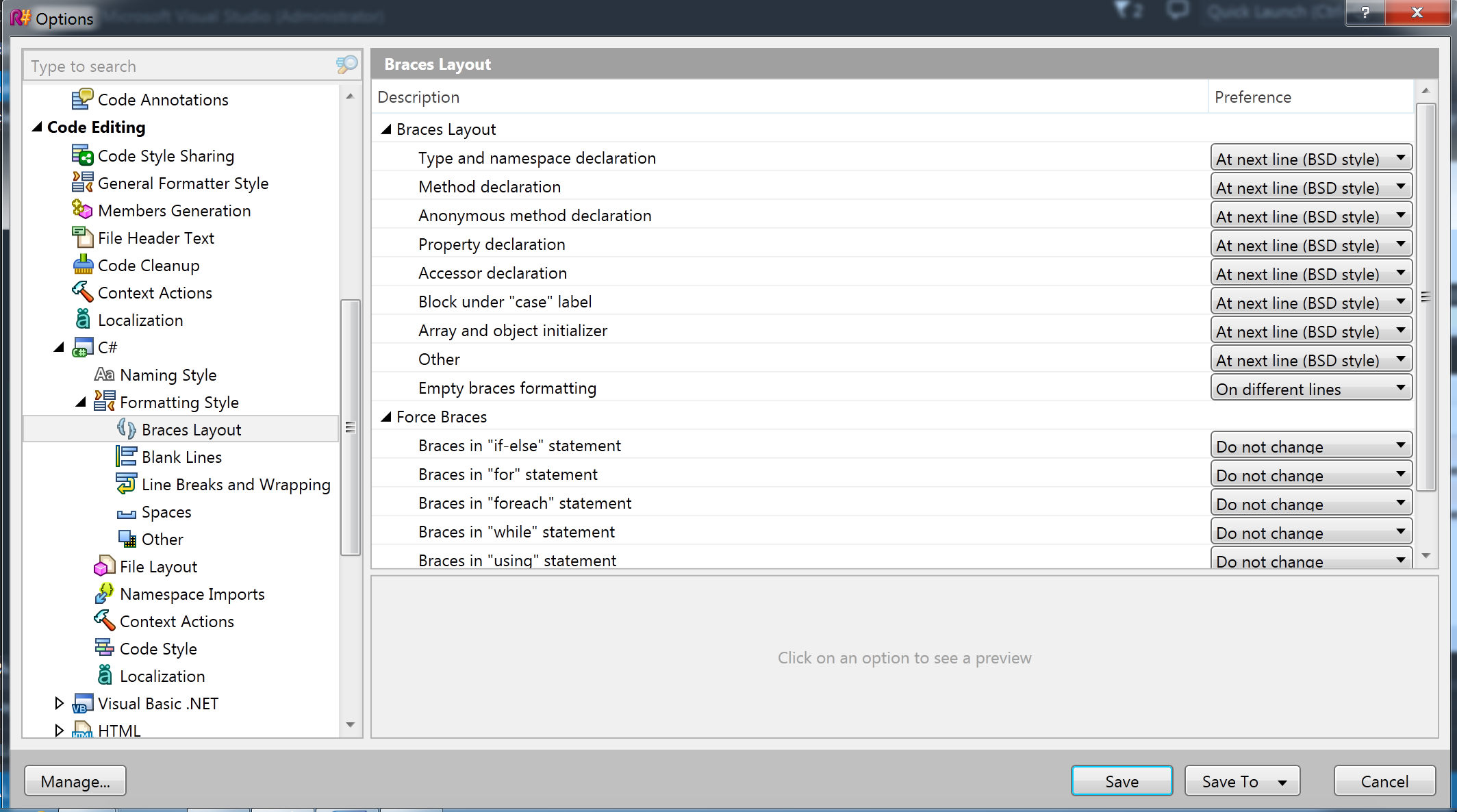

Fix code formatting issues early on

Formatting issues such as indentation, blank lines, and spaces irritate the team. R# formatting feature works well in cleaning up the formatting.

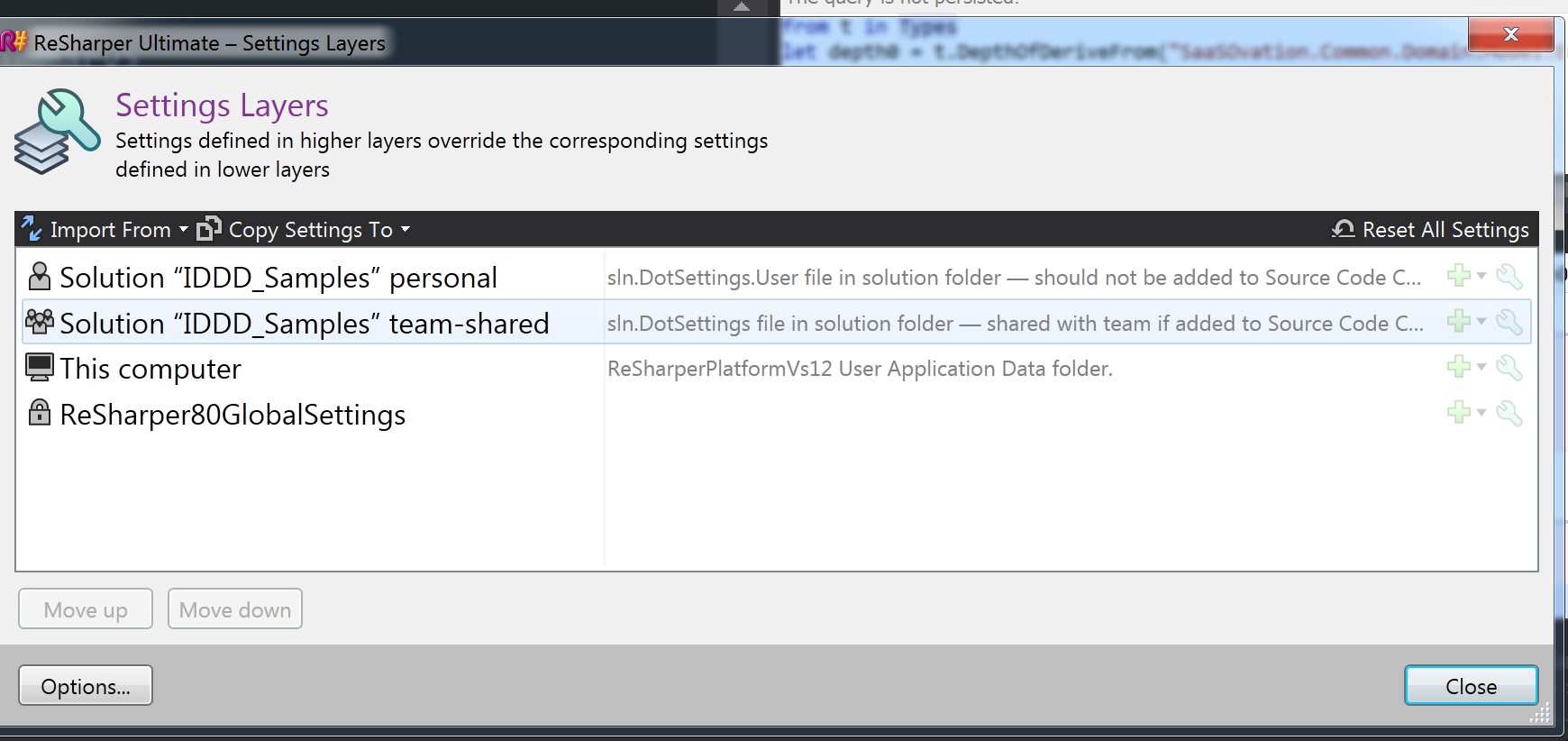

Team level R# settings help in maintaining consistency.

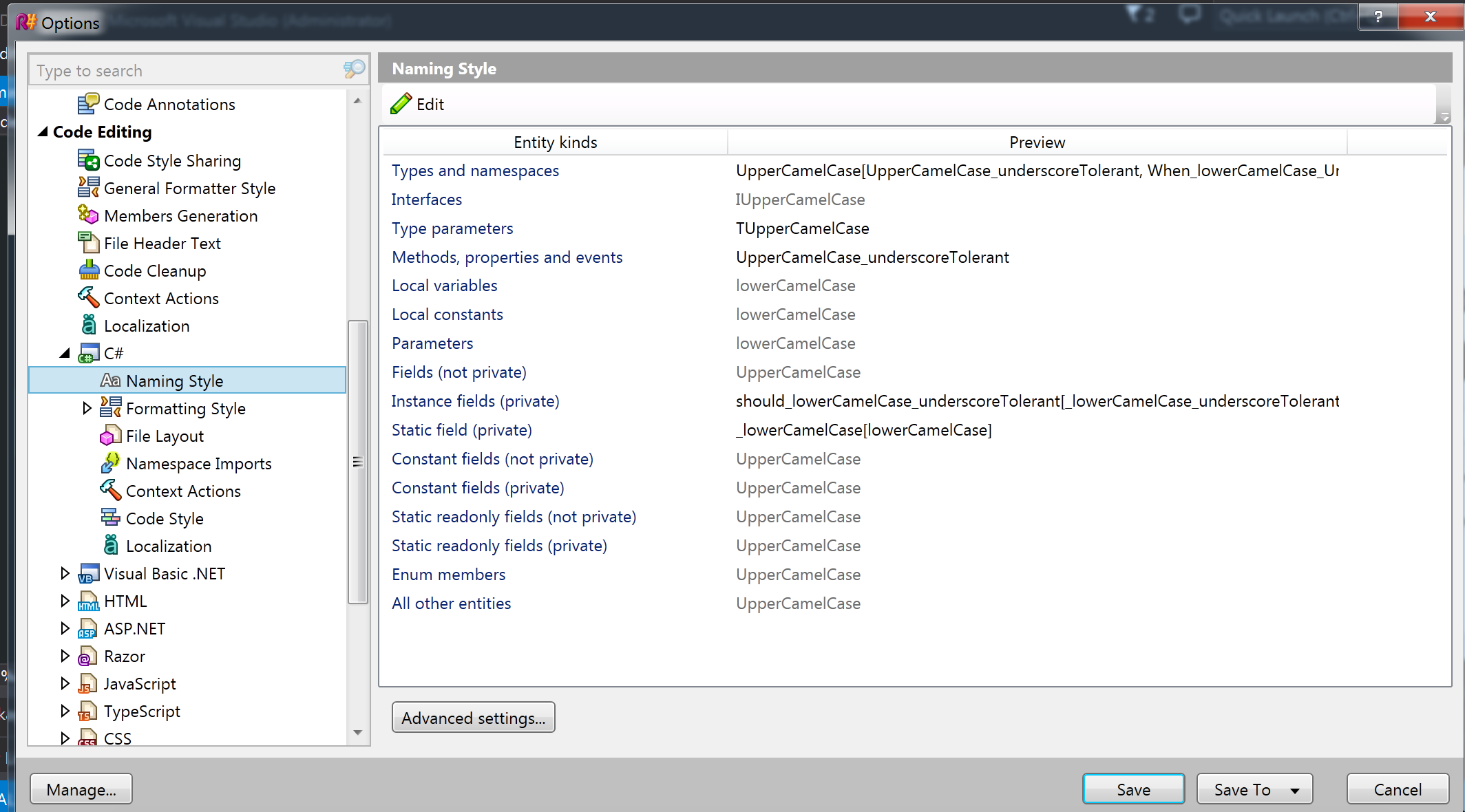

Document naming conventions and coding styles

It is important to compile the coding guidelines at the beginning of the project. Framework defined guidelines are a good starting point. Even if an enterprise-wide document is already available, the team still should go through it. The collaborative effort promotes collective code ownership. Code consistency depends on the buy-in from the entire team; otherwise it becomes the focus of the code reviews making them inefficient.

Achieve consistency

There are tools you could use to create shorter feedback loops. R#, with extensive rule sets and auto correcting capabilities, tops the list.

You can customize default naming styles and share them across the team using the custom profiles. Stylecop users can benefit from R# auto fixes using Resharper.Stylecop plugin.

Document general coding guidelines

Teams compile general coding guidelines, DOs and DONTs, such as preferring exceptions over return codes, implementing IDisposable if a class contains disposable fields. Generally it is a best practice to make expectations explicit to avoid rework. You can refer heuristics in Bob Martin's Clean Code book. People at csharpguidelines.com compiled a set of coding guidelines including some best practices, and the R# settings targeting these coding styles.

Achieve consistency

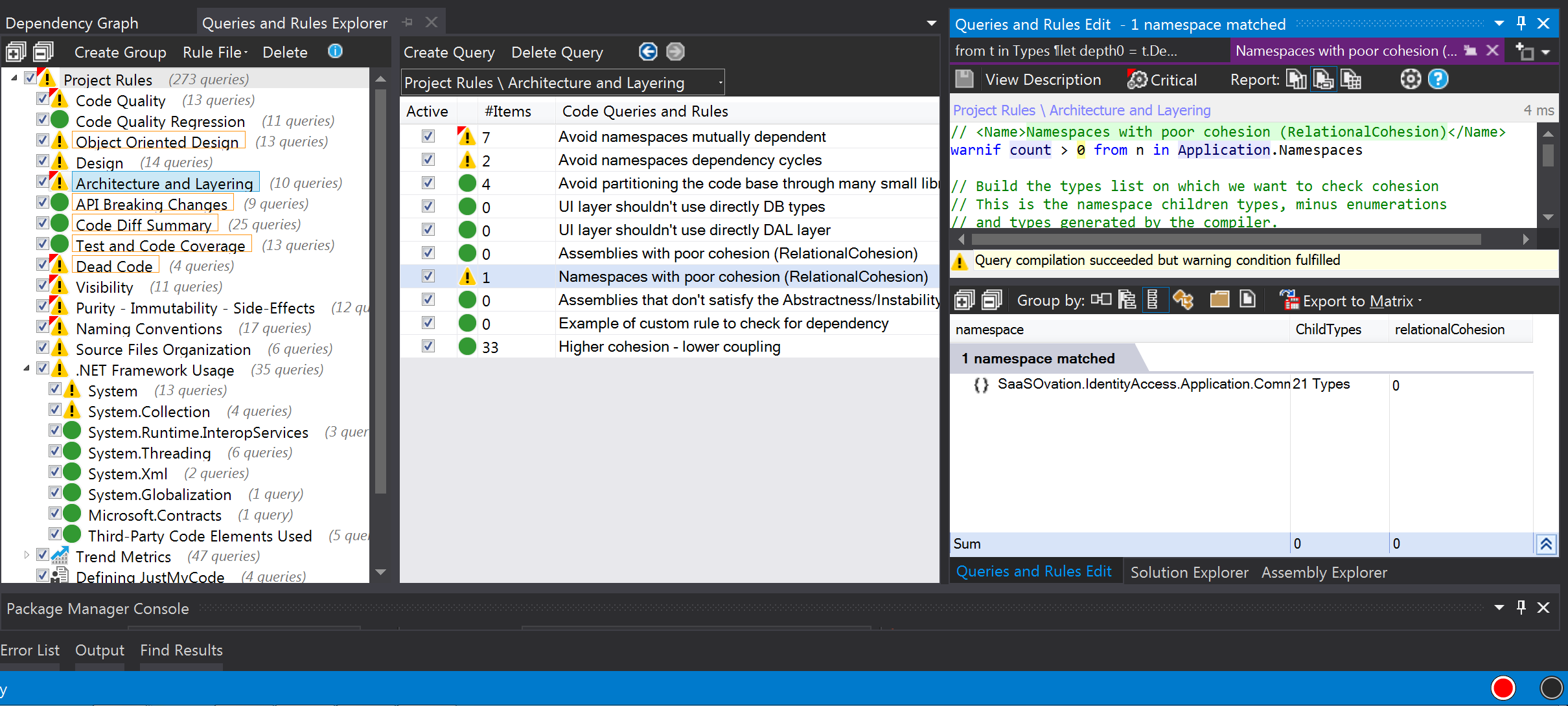

As with the styles/naming conventions, the team should strive to write code following the heuristics. Teams waste enormous amout of time in enforcing the guidelines through the reviews. A tool is helpful if it reminds you about these guidelines as you write the code. NDepend comes with a set of code rules. You can write new or customize them. As everyone in the team may not know all the guidelines, you could start with what the team can follow and then you could drive continuous improvement over few iterations. Iteration/Sprint goals are best suited to learn and practice these rules before enabling them. It helps to start with green NDepend's status circle (in the IDE status bar). Over few iterations, you can add rules progressively as the team gets comfortable.

This immediate feedback reduces the knowledge gap and reminds people when they take any shortcuts. If the developers use all they know that itself is a big improvement.

Code commenting

It is hard to maintain the quality of the comments if you enforce it through the review sessions. Ad-hoc comments lack consistency whereas a Samaritan effort is suboptimal. It is a challenging task to keep the comments alive throughout the project cycle.

The team should name the things to improve the code readability. You should add comments where necessary explaining why. Redundant, superfluous comments are such a waste of time. A Team that focuses on the readability of the tests and the code gets better ROI. One side-benefit of TDD is that the tests become a reliable documentation. Intention revealing tests along with good documentation help your code users outside your team.

If the code is the design, then tests are the best documentation you could give.

GhostDoc plugin automates the routine tasks of inline XML commenting.

It is necessary to make the strategic decision early on, and the team should strive to achieve consistency throughout the project. There is no free lunch when it comes to commenting. The team should keep this effort in mind during estimating sessions. Consistency is the key.

Avoid Premature Optimizations

If there is one thing you should keep out of the reviews, that should be premature optimizations.

"Programmers waste enormous amounts of time thinking about, or worrying about, the speed of non critical parts of their programs, and these attempts at efficiency actually have a strong negative impact when debugging and maintenance are considered. We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil. Yet we should not pass up our opportunities in that critical 3%." - Donald Knuth

Don’t let the team fall into guesswork. Get the story done before carrying out optimization efforts. Most often, the optimizations efforts are started by defects. But SLAs should drive the performance efforts. SLAs are constraints on the stories. Find them out early in the project and make them public and visible in the team area. Use performance stats and profiling to find the critical 3% effort.

To avoid uniformly slow code, the team can compile the platform-specific performance idioms and add them to the guidelines document. Using StringBuilder for complex string operations is one such item, for example. Iterative development makes it easier to spot any such code behavior before going too far.

Now comes the meaty stuff that requires effort to learn and practice to gain expertise.

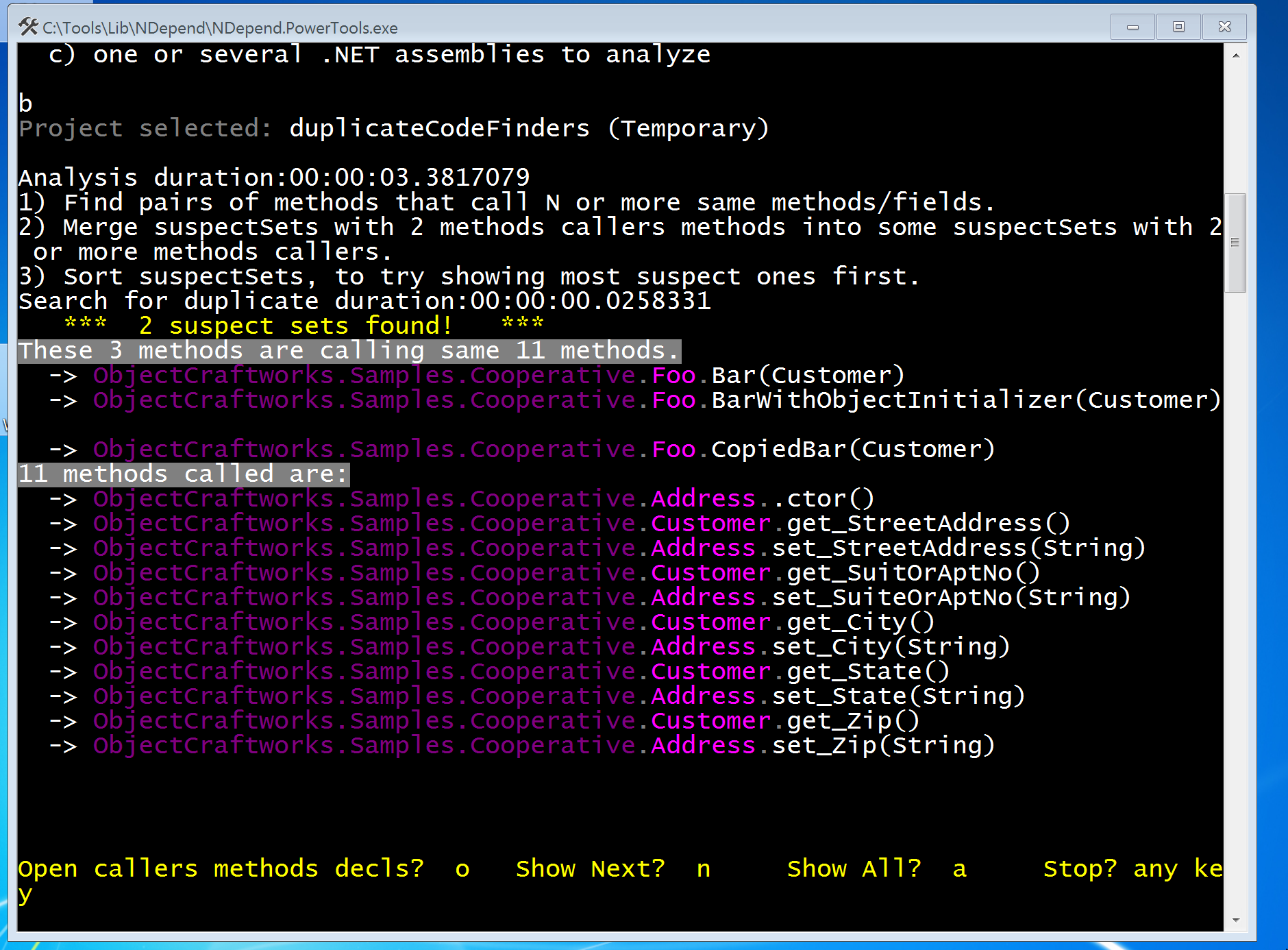

Take care of code duplicates early on

Code reviews are too late to find the duplicates. See my post for how duplicate finders(as shown below) assist developers as they write the code.

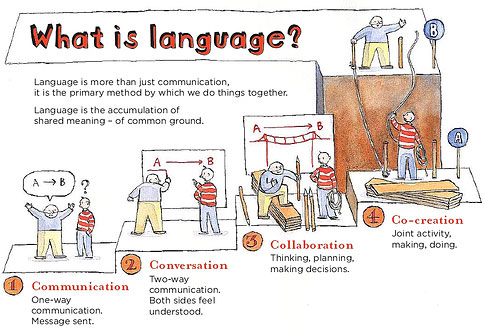

Develop Shared Vocabulary

Arbitrary language creates conflicts making the meetings painful to attend next time. The team should guard against language ambiguities such as semantic diffusion, and flaccid words. It should put effort to come to common understanding of the key vocabulary. For example, the word "Refactoring" is being used to describe various things, weakening its intended use. Martin Fowler calls this RefactoringMalapropism.

The team that speaks shared vocabulary is set for the success. The power of shared vocabulary can do wonders in other areas too.Dave Gray created this wonderful graphic.

For better code reviews, the team needs to develop the vocabulary to talk about the issues and their available remedies. Luckily, You need not reinvent the language, as, since XP, there are several sources. Code smells, design smells and the refactorings that fix these issues are a good starting point. SOLID principles can help in refactoring and designing activities. Once you gain the expertise in the design patterns usage, You will have a rich vocabulary.

Martin Fowler covered about code smells in his Refactoring book. This vocabulary helps in communicating the code troubles, and the appropriate refactorings.

The team should gain fluency. A culture that supports the expressions in such rich vocabulary is essential to agile maturity. The culture should be mindful of the mixed skill level. The leadership should enable the environment for experiential learning. With such common understanding, the team organically opts for the pair programming.

Here are few examples of expressions:

When you see minor changes to several classes, you could say

Looking at the changes you just made, we have this behavior all over the place. It is a shotgun surgery. Let's inline this class.

When a class is too much dependent on other classes:

With this change, class Foo has just become a feature envy. Let's extract this part and then move it to that class.

When someone overzealously applied OCP principle:

This instantiation does not need a separate factory now. We can take the first bullet, and wait for the actual need. One less indirection is always good.

As you see this is much more effective than what you hear typically I like this, or don't like that. Once a team gets comfortable with the Refactoring Catalog and the awareness of code smells, its code reviews become much shorter and effective.

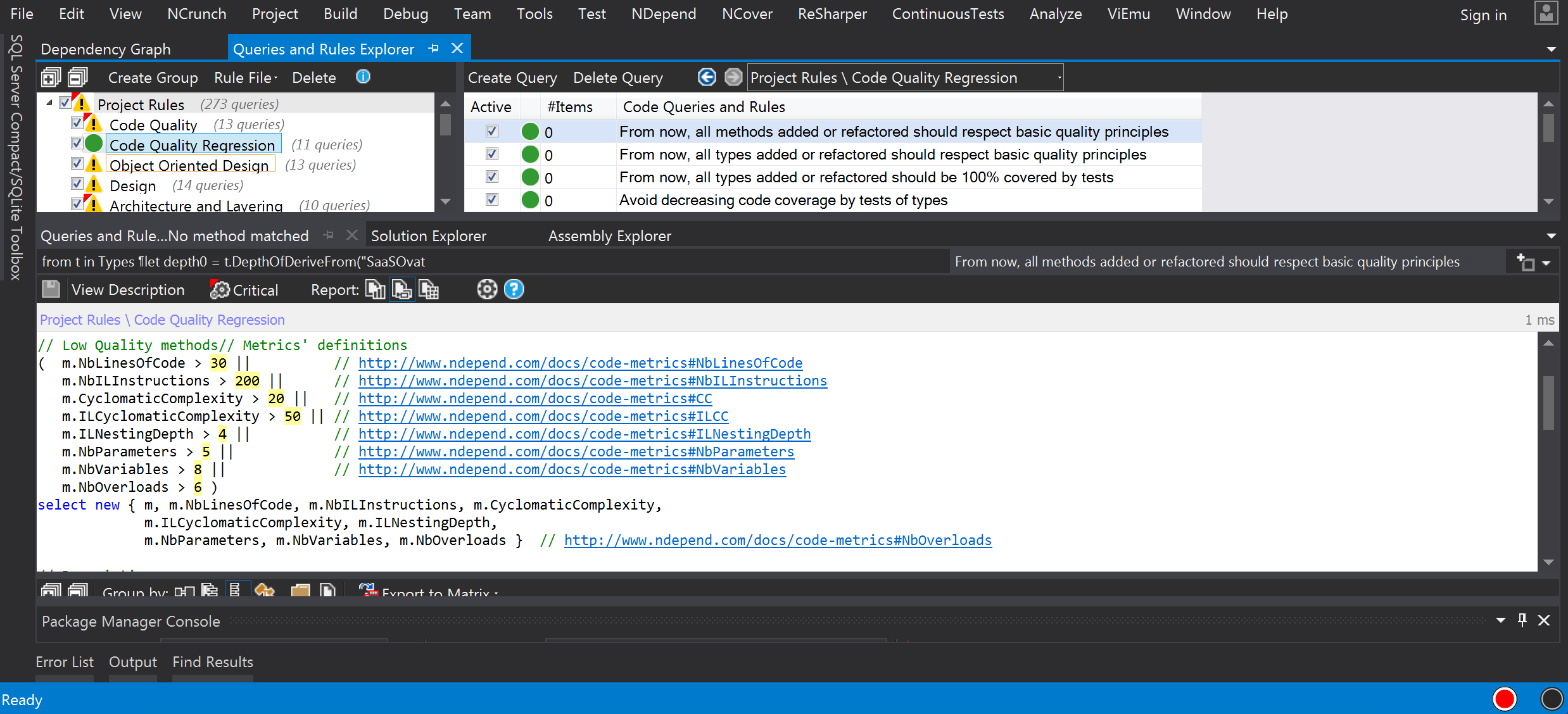

Manage code with metrics

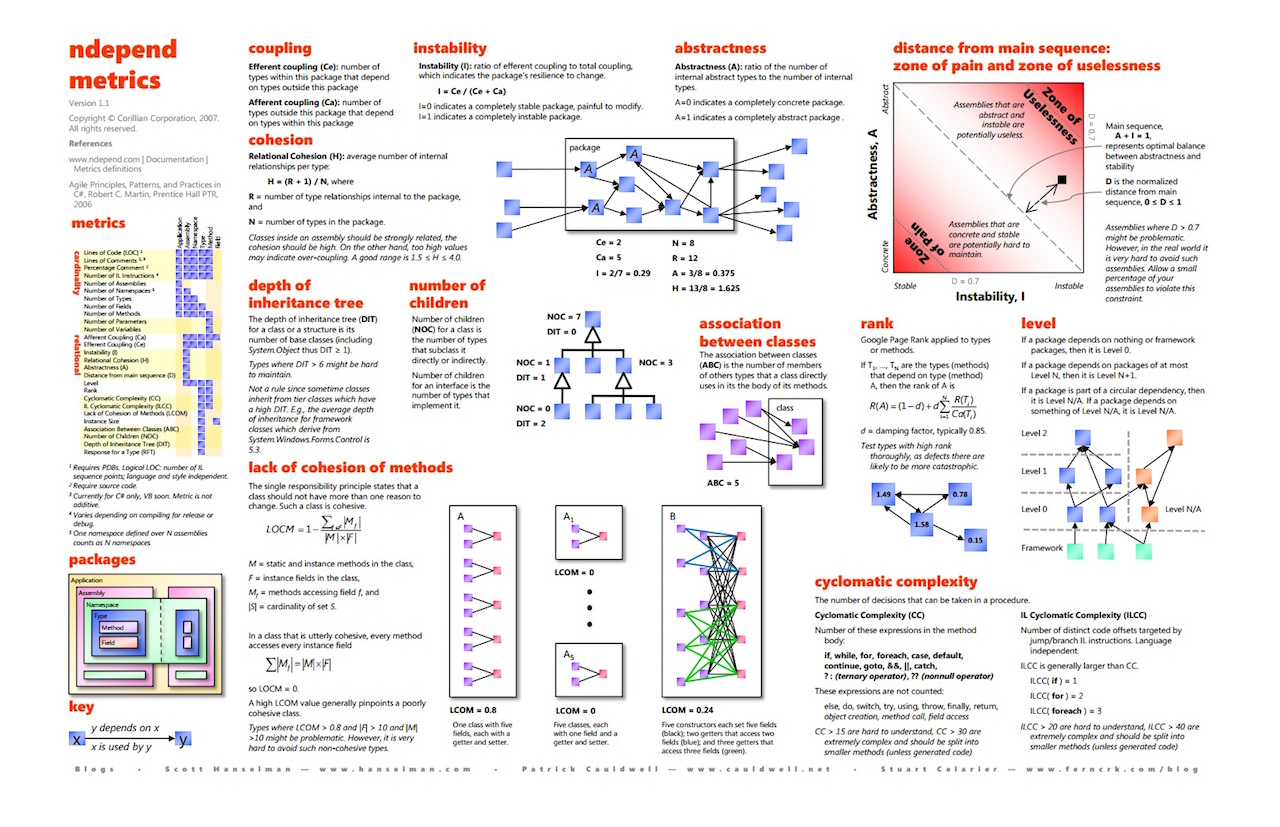

As information measurements, code metrics give us a useful view of the codebase. Code Metrics based vocabulary makes the code quality a team activity.

NDepend is a handy tool to calculate the metrics. With this tool, you can create the shorter feedback loops by turning expectations into code rules. Its status circle, which is in the IDE status bar, will turn red as soon as a violation is detected. You can also turn these code rules into critical rules to fail the build whenever the metrics cross the thresholds. For example, you can write a code rule to fail the build when LOC of any method crosses a threshold value let’s say seven.

Here is a metrics placemat for your reference.

Don't let the bad code pile up

The refactoring in a brownfield project can overwhelm, no matter what you do, there will be code smells. If you allow them to happen, the code further deteriorates. As Broken Window Theory states, bad design piles up. Any time we take a shortcut, we lose an opportunity to hone our skills.

One approach that works well is "from now onwards". The idea is simple; you would create a baseline to track the quality of the code for all the present and future code changes to make sure you are not making it worse. Continuous improvement becomes fun and brings motivation from the job satisfaction. It creates positive reinforcing loop.

You can use NDepend to create the baseline. With metrics, code rules make from now on goals measurable. For example, the goal such as the distance from the main sequence, measuring stability and abstractness, of module X, should not exceed standard deviation of 1, is easy to measure and track.

Wrap-up

Items we covered that make code reviews focused and effective:

- Embrace the design as a team activity and create the culture of continuous learning.

- Document coding guidelines, naming styles, formatting, and performance idioms.

- Don't wait for the code reviews; Take care of typos, formatting, naming conventions, general coding guidelines, and code duplicates, as the code is being developed.

- Use tools to achieve consistency and to let the machines do the dirty work.

- Avoid premature optimizations. SLAs, profiling, and performance tests should drive optimization efforts. Make SLAs public and add them as constraints on the stories.

- Set expectations early on the project and aim for shorter feedback loops. For certain activities, the code reviews may be too late.

- Develop a shared vocabulary for the design activities. Code smells, OO metrics, the refactoring catalog, and the design patterns are the great language sources.

Attribution: Thanks to Prashant Menon for the ants team play image.